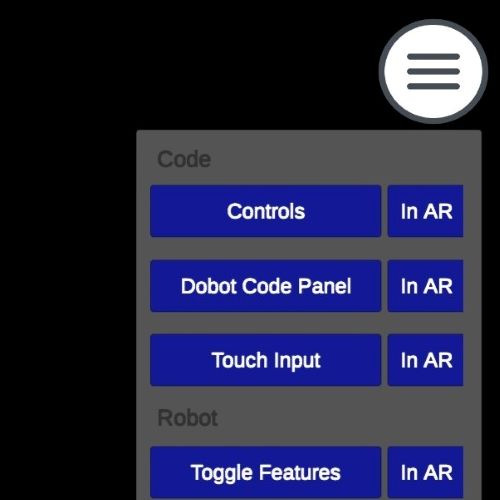

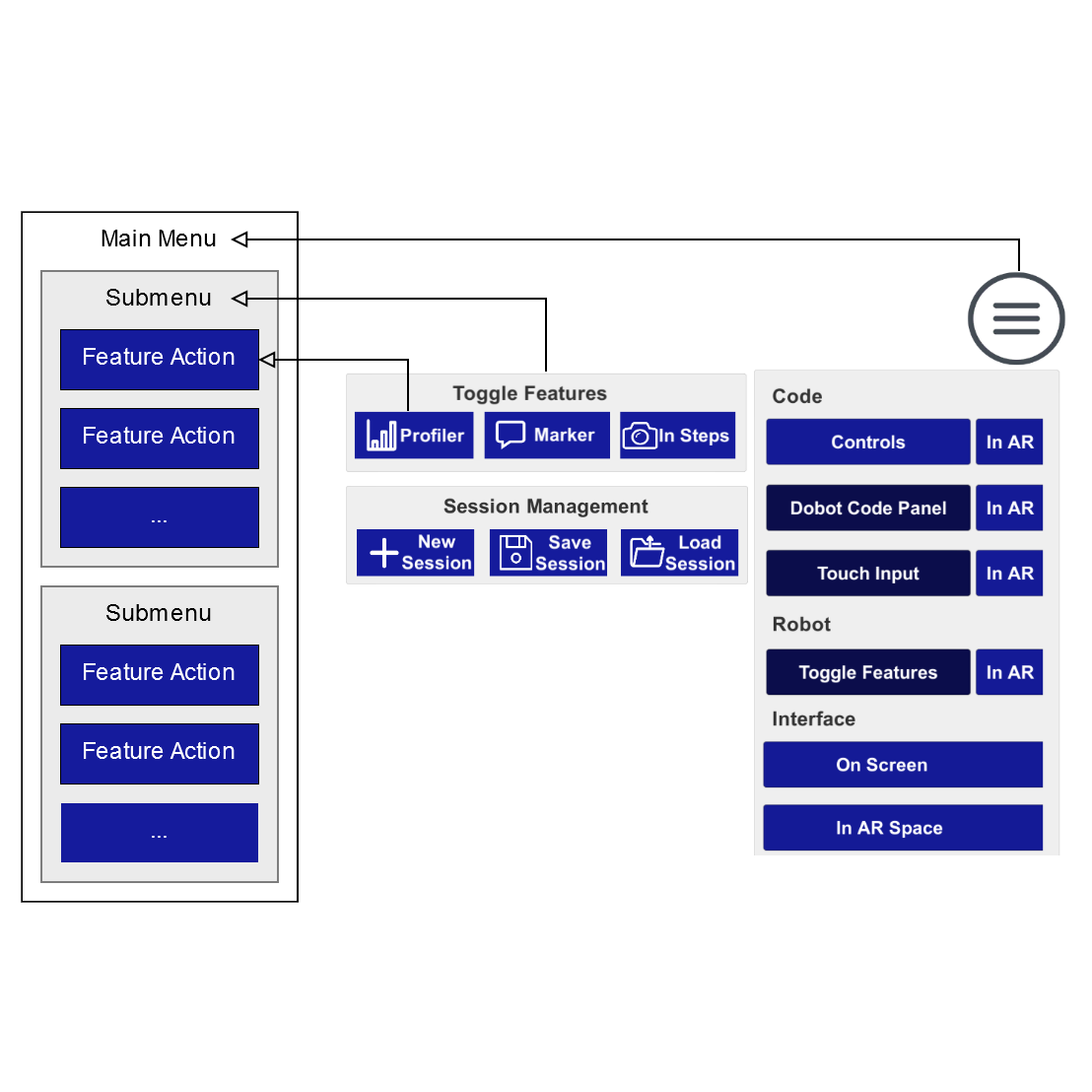

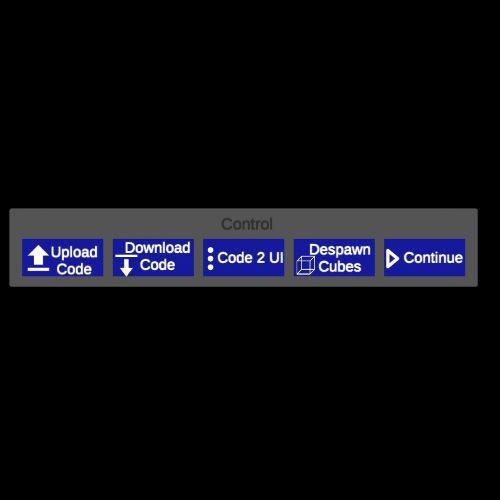

Main Menu

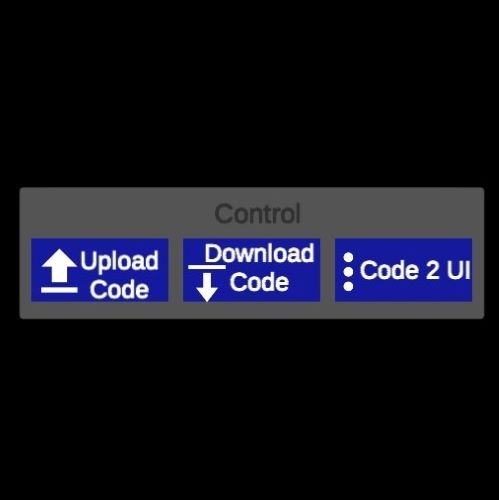

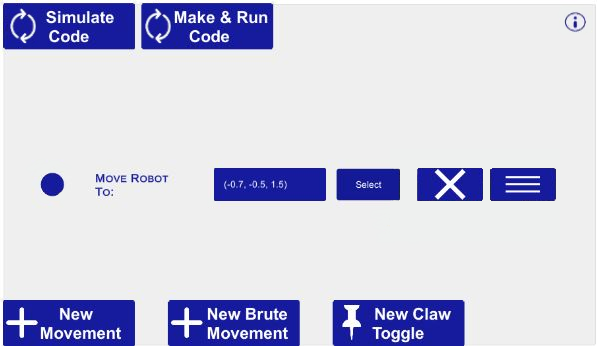

The main menu is opened by tapping the icon in the top-right corner of the screen. It holds all submenu items, which in turn contain the features displayed in the AR space, along with some additional options. A ‘Debug’ button switches the application between debug mode and normal running mode. A session management feature saves the current scene in its present state, so that the user can move to other scenes whenever required without restarting the application; this allows the multiple scenes in the application to be handled safely. The menu is scrollable and occupies only a limited portion of the screen. Each submenu option has an AR toggle button that immediately moves the item from screen space to AR space and back. This can be done for each item individually or for all items at once. The submenu items and special functions are grouped into categories so that they are easy to find.
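The menu behaviour described above can be modelled in a few lines. The sketch below is a language-neutral illustration written in Python. It is not the application's actual code, which this document does not show. The classes `Space`, `SubMenuItem`, `MainMenu` and `SessionManager`, and all their methods, are hypothetical names. The sketch makes three assumptions. Each submenu item records whether it is in screen space or AR space. The debug option is a simple on/off flag. "Saving a scene" means keeping a deep copy of its state, keyed by scene name.

```python
from __future__ import annotations

import copy
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Optional


class Space(Enum):
    # Hypothetical: where a submenu item is currently shown.
    SCREEN = "screen"
    AR = "ar"


@dataclass
class SubMenuItem:
    name: str
    category: str
    space: Space = Space.SCREEN

    def toggle_ar(self) -> None:
        # Individual AR toggle: flip this item between screen space and AR space.
        self.space = Space.AR if self.space is Space.SCREEN else Space.SCREEN


@dataclass
class MainMenu:
    items: list[SubMenuItem] = field(default_factory=list)
    debug: bool = False  # assumed: debug mode modelled as an on/off flag

    def toggle_debug(self) -> None:
        self.debug = not self.debug

    def set_all(self, space: Space) -> None:
        # Collective AR toggle: send every submenu item to the same space.
        for item in self.items:
            item.space = space

    def by_category(self) -> dict[str, list[str]]:
        # Group item names by category, preserving insertion order.
        groups: dict[str, list[str]] = {}
        for item in self.items:
            groups.setdefault(item.category, []).append(item.name)
        return groups


class SessionManager:
    """Keeps a snapshot of each scene's state so scenes can be switched without a restart."""

    def __init__(self) -> None:
        self._saved: dict[str, Any] = {}

    def save(self, scene: str, state: Any) -> None:
        # Deep copy so later changes in the live scene do not alter the snapshot.
        self._saved[scene] = copy.deepcopy(state)

    def restore(self, scene: str) -> Optional[Any]:
        # Return an independent copy of the saved state, or None if nothing was saved.
        saved = self._saved.get(scene)
        return copy.deepcopy(saved) if saved is not None else None


if __name__ == "__main__":
    menu = MainMenu([SubMenuItem("Compass", "Navigation"), SubMenuItem("Info", "Details")])
    menu.items[0].toggle_ar()   # send one item to AR space
    menu.set_all(Space.AR)      # send all items to AR space
    sessions = SessionManager()
    sessions.save("scene_a", menu)
    menu.set_all(Space.SCREEN)  # the live menu changes; the snapshot does not
    print(sessions.restore("scene_a"))
```

The sketch deep-copies state both when a scene is saved and when it is restored. This keeps the stored snapshot independent of the live scene, so changes made after switching scenes cannot alter what was saved.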